I’m struggling to get reliable outputs from GPTZero for AI detection: sometimes the tool gives false positives, and sometimes it misses generated content. I need advice on steps to improve its accuracy, or any user tips for better detection. Any help or settings suggestions would be appreciated.

Oh man, you’re not alone! GPTZero is supposed to be this big brain for AI detection, but half the time it’s like playing whack-a-mole: spot the bot, miss the bot, hope for the best. Here’s my rundown after hammering at it for weeks on end:

- Short input? Forget it. GPTZero needs longer passages to work well. Feed it 1-2 sentences and it’ll just shrug. Try whole essays or articles for better accuracy.

- Stylistic extremes trip it up. If the writing is super formal or uses overly simple sentences, GPTZero sometimes cries “AI!” even if it’s really human. Mix up the sentence structure a bit if you’re testing.

- Multiple sources play tricks. Blend human and AI writing in one doc, and the tool can get seriously confused. Run smaller chunks separately if possible.

- Don’t trust it as gospel. Take its verdict with a grain of salt; it gives false positives and negatives—like, a LOT.

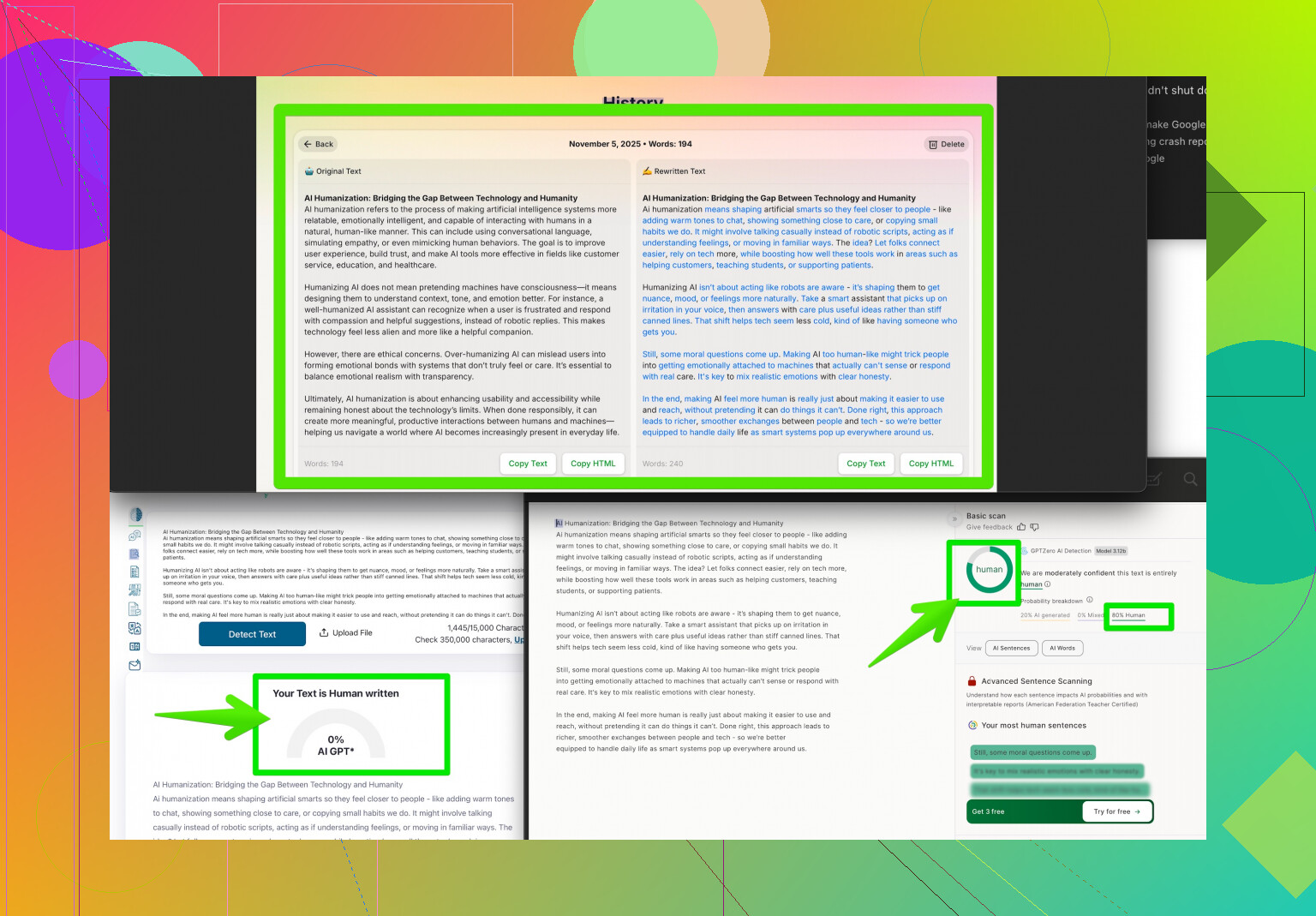

- Paraphrasing helps. If you’re trying to bypass detection or just want more ‘human’-like output, tools like Clever AI Humanizer are a game changer. It rewrites text in a way that fools not just GPTZero, but most AI detectors. You can check out the details in the guide on how to make your writing sound more human.

Long story short: GPTZero is decent for flagging obvious AI, but it will never be perfect. Always cross-check with other tools and, if you really need that human vibe, use a humanizer like Clever AI Humanizer to stay ahead of the game. Hope that doesn’t sound too cynical, just battle scars talking!

If I’m being honest, GPTZero plays fast and loose with what it thinks is AI. I agree with @viajantedoceu on some stuff, but I’ve actually gotten better results by USING the tool kinda like a lie detector: always skeptical. You can’t just slam your text in and trust whatever it spits out.

Try running the same content through multiple detectors (Originality.ai, Turnitin’s AI checker, Copyleaks, Winston, etc.) and look for a consensus. If three different tools are yelling “ROBOT!” then you’ve probably got some AI going on. But don’t chase a 100% yes/no, because honestly, none of these tools are at the holy grail stage.
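For anyone who wants to make the "look for a consensus" step less hand-wavy, here is a minimal sketch. It assumes you have already collected an AI-probability score between 0 and 1 from each detector by hand; it does not call any detector's API. The function name `consensus`, the 0.5 threshold, and the three-vote rule are all my own assumptions, so tune them to taste.

```python
from statistics import median


def consensus(scores: dict[str, float], threshold: float = 0.5, min_votes: int = 3) -> dict:
    """Summarize AI-probability scores (0.0 to 1.0) collected from several detectors.

    Hypothetical helper: the threshold and vote count are illustrative
    assumptions, not values recommended by any detector vendor.
    """
    if not scores:
        raise ValueError("need at least one detector score")

    # Detectors whose score meets or exceeds the threshold count as a "vote" for AI.
    flagged = sorted(name for name, p in scores.items() if p >= threshold)

    if len(flagged) >= min_votes:
        verdict = "likely AI"
    elif not flagged:
        verdict = "likely human"
    else:
        verdict = "inconclusive"  # mixed signals: hand it to a human reviewer

    return {
        "flagged_by": flagged,
        "median_score": median(scores.values()),
        "verdict": verdict,
    }


# Made-up scores, typed in by hand after running each tool separately.
example = consensus({"gptzero": 0.91, "copyleaks": 0.78, "winston": 0.64, "originality": 0.35})
print(example["verdict"], example["flagged_by"])
```

The "inconclusive" bucket is the point of the exercise: anything short of agreement goes to a human instead of being forced into a yes/no.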

One thing I do, which maybe goes against the grain, is “chunking” not by splitting things super small, but by breaking at logical paragraph boundaries. Context matters: if a chunk is too short, nuance is lost; too long and, yeah, you get your word-soup false positives.
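Here is roughly how I'd script that kind of paragraph-level chunking. It is a sketch under assumptions: paragraphs are separated by blank lines, and the 150-word minimum is a number I picked for illustration, not a documented GPTZero requirement. The helper name `chunk_by_paragraph` is hypothetical.

```python
import re


def chunk_by_paragraph(text: str, min_words: int = 150) -> list[str]:
    """Group whole paragraphs into chunks of at least `min_words` words.

    Paragraphs are never split, so each chunk ends at a logical break.
    The default minimum is an illustrative assumption.
    """
    # Treat one or more blank lines as a paragraph boundary.
    paragraphs = [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]

    chunks: list[str] = []
    current: list[str] = []
    count = 0
    for para in paragraphs:
        current.append(para)
        count += len(para.split())
        if count >= min_words:
            # Enough context collected: close the chunk at this paragraph break.
            chunks.append("\n\n".join(current))
            current, count = [], 0

    if current:
        leftover = "\n\n".join(current)
        if chunks:
            # A short tail would be too small to test alone, so attach it
            # to the previous chunk.
            chunks[-1] = chunks[-1] + "\n\n" + leftover
        else:
            chunks.append(leftover)
    return chunks
```

Each returned chunk can then be pasted into the detector separately. A single very long paragraph stays in one piece, since splitting mid-paragraph is exactly what this approach tries to avoid.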

Also worth noting: some detectors (including GPTZero) really hate generic intros or overly slick transitions. If you’re only tweaking small bits or using really formulaic academic phrases, it often spikes the AI probability.

Real talk though? If you want a pro tip that works, after testing a bunch of stuff, nothing is more effective than rewriting with a tool like Clever AI Humanizer. Not only does it scramble up syntax and flow, it makes things actually readable and human-sounding. Way better than just switching words around.

And for those who want some extra Reddit wisdom, check out this helpful thread about strategies to make your writing sound more human and bypass AI detectors. It’s loaded with community-driven hacks and some eye-rolling fails.

Lastly, if you’re relying on any AI detector as the final judge, you’re setting yourself up for headaches. Always verify with your own eyes (or, even better, a human editor) before making any big decisions. Don’t make GPTZero your only cop on the beat.

Let’s break this into real talk, no-nonsense style. GPTZero is hit or miss; everyone in this thread already spotted that. But here’s a curveball: sometimes the problem isn’t just the tool or the way you format chunks. The entire idea of depending on statistical detectors to judge something as human as writing is, well, fundamentally flawed. No AI detector will nail it 100% because writing is way too diverse for strict pattern-matching.

You’ve heard the advice already: chunk at logical breaks, feed it longer bits, check multiple tools (like Winston, Copyleaks, or Turnitin’s detector). Solid. But try this: layer up your approach. After you’ve run text through GPTZero and a couple of its competitors, do your own forensic analysis. Read it aloud: awkward phrasing, repetitive structure, or robotic transitions stand out immediately in speech. That’s step one, and frankly, human instinct still beats the bots.
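If you want a rough pointer for where to focus that read-aloud pass, a few lines of Python can surface repetitive structure. To be clear, this is my own crude heuristic and not how GPTZero or any other detector works: it only reports how much sentence lengths vary and which word most often opens a sentence. The name `structure_report` is hypothetical, and the sentence splitter is naive (it will stumble on abbreviations like "e.g.").

```python
import re
from collections import Counter
from statistics import mean, pstdev


def structure_report(text: str) -> dict:
    """Report simple signals of repetitive sentence structure.

    Illustrative heuristic only: it cannot tell human from AI writing.
    """
    # Naive split: break after ., ! or ? followed by whitespace.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    if not sentences:
        raise ValueError("no sentences found")

    lengths = [len(s.split()) for s in sentences]
    # Count the first word of each sentence, case-insensitively.
    openers = Counter(s.split()[0].lower() for s in sentences)

    return {
        "sentences": len(sentences),
        "mean_length": mean(lengths),
        "length_stdev": pstdev(lengths),  # near zero means very uniform sentences
        "top_opener": openers.most_common(1)[0],  # (word, count)
    }


print(structure_report("The cat sat. The dog ran. The bird flew."))
```

A low `length_stdev` or one opener dominating the count just tells you which passages to read aloud first; the judgment call is still yours.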

If you need to pass AI detection, yes, the Clever AI Humanizer comes up often for a reason: it doesn’t just paraphrase but actively churns out writing that’s purposefully less predictable. That’s a plus—gives you the edge against basic detectors and even trips up stricter ones sometimes. However, it’s not a magic bullet: output can occasionally go off the rails (over-humanized, odd phrasing, maybe misses your tone entirely), so always review before submitting or publishing. It also takes a bit more time than quick synonym tools. Compared to offerings from the others mentioned in the thread, it generally outperforms in ‘passing’ scores but can sacrifice factual accuracy on dense topics.

Look, if absolute reliability is your goal, supplement everything with peer or editorial review. No tool can guarantee peace of mind, but the right sequence of detector cross-check, human once-over, smart rewriting (Clever AI Humanizer for the win), and self-audit will cover most bases. Just don’t get too comfortable with any one solution because these detectors evolve as quickly as the AI writers themselves!