I’m struggling to find a reliable AI detector to verify if text is written by a human or generated by AI. I tried a few tools but the results seem inconsistent. Has anyone found an AI detector that consistently gives accurate results? I really need one for school and work assignments.

Honestly, AI detectors are kinda hit-or-miss right now. Even the ones that claim “98% accuracy” feel like flipping a coin sometimes, especially with advanced AI-generated content or stuff that’s been edited a bit. I’ve used a bunch, like GPTZero, Copyleaks, and Originality.ai, and for every instance they get right, there’s another where they spit out random scores for the same text depending on the day. It’s wild.

The issue is, the tech is always one step behind the latest language models (like GPT-4, Claude, etc.), so as the AI gets better at sounding human, detectors just can’t keep up. Even the developers admit they’re best used as an “indicator,” not solid proof. You can pass the same paragraph through one detector and get “Highly likely AI,” then another says “Def. Human.” Super not helpful.

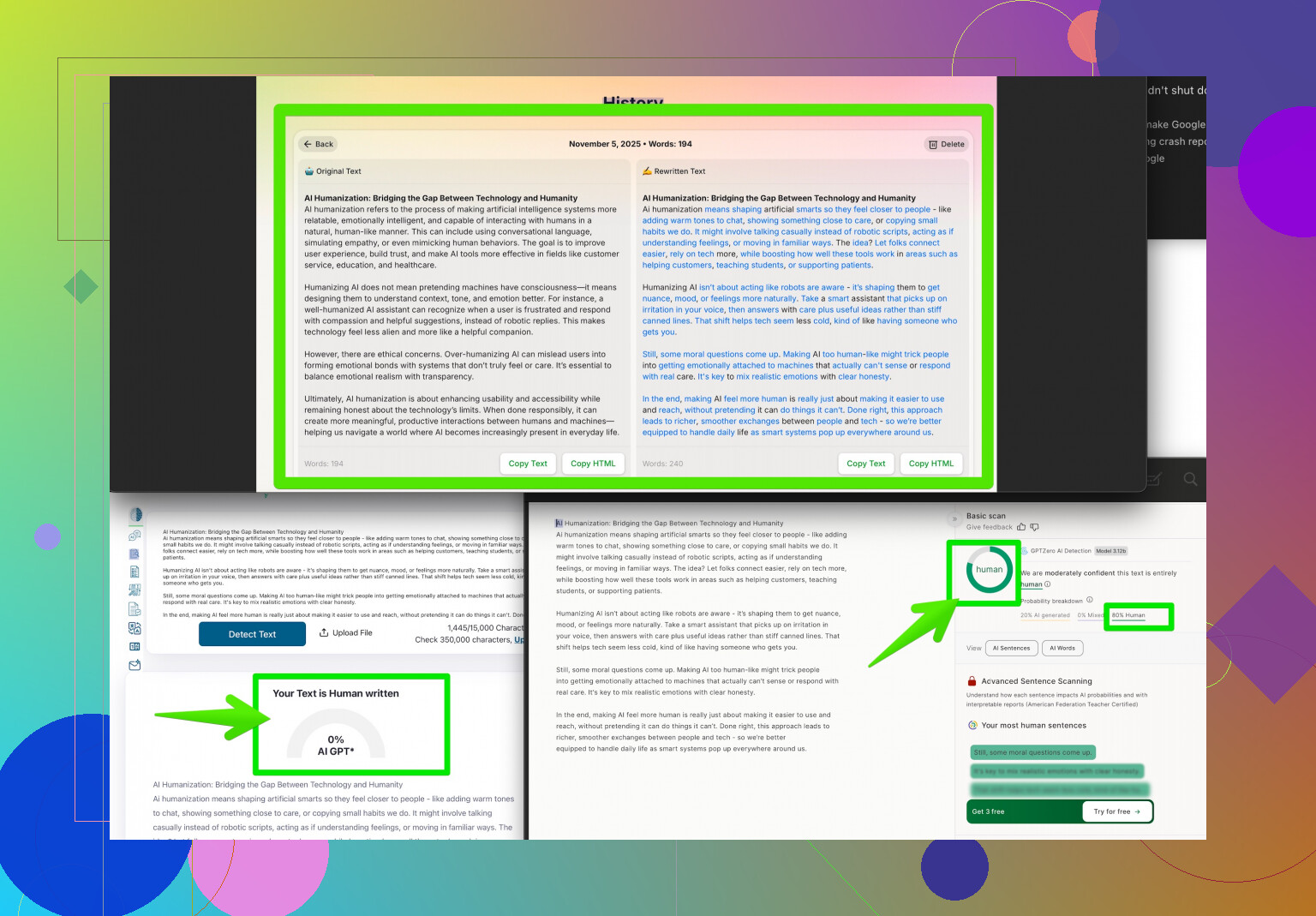

One tool that’s actually helping people bypass detectors is the Clever AI Humanizer. If your concern is making content look as human as possible (say, for SEO editors or freelancers), this tool is supposed to process AI-generated text and make it read naturally enough to slip past most detectors. The pitch is to make your content indistinguishable from human writing. Just remember, it’s cat-and-mouse: detectors get smarter, these tools adapt.

But if your goal is to reliably detect AI, rather than evade, sorry to break it to ya, but none of these tools are 100% solid yet. Manual review—like looking for weird turns of phrase, repetition, or factual mistakes—still beats most detectors. If anyone finds a tool that actually nails it every time, let the rest of us know!

Honestly, the quest for an accurate AI content detector feels like trying to catch smoke with your bare hands: every time you think you’ve got something, poof, it’s gone. I saw what @codecrafter mentioned, and gotta say, I mostly agree: the current landscape is like the wild west, with tools like GPTZero, Copyleaks, and Originality.ai flipping a coin on your text. But here’s my two cents: the accuracy claims for these detectors are overblown, and even ‘manual review’ isn’t a sure thing. It can miss sneaky AI quirks (seriously, humans can be weird writers too).

One angle folks ignore: context matters just as much as the tool. For high-stakes stuff (academia, legal, etc.), a detector’s shaky result isn’t enough. Trusted peer review or comparing writing samples over time works better. Sometimes old-school stylometry (think: spotting repeated phrases, unnatural transitions, etc.) is a more reliable ‘detector’ than the algorithm.
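
For the "repeated phrase" check, here's a rough Python sketch of what I mean. It only counts word sequences that show up more than once; the function name `repeated_phrases` and the defaults (`n=3`, `min_count=2`) are my own placeholders, not anything a real detector uses. A hit is a prompt to look closer, not proof of anything.

```python
import re
from collections import Counter

def repeated_phrases(text, n=3, min_count=2):
    """Return word n-grams that occur at least min_count times."""
    # Lowercase and keep only letters/apostrophes so punctuation doesn't split matches.
    words = re.findall(r"[a-z']+", text.lower())
    # Slide a window of n words across the text.
    ngrams = zip(*(words[i:] for i in range(n)))
    counts = Counter(" ".join(gram) for gram in ngrams)
    return {phrase: c for phrase, c in counts.items() if c >= min_count}

sample = (
    "It is important to note that results vary. "
    "It is important to note that detectors disagree."
)
print(repeated_phrases(sample))
```

Plenty of human writers repeat themselves too, so treat the output as one signal among several.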

Now about bypassing detectors—yep, tools like Clever AI Humanizer are becoming part of the workflow, especially for freelancers or content creators who need things to look, well, less robotic. They actually work surprisingly well, but that’s also why straight-up detection is impossible to guarantee.

Bottom line? No detector is “most accurate” or invincible right now. If you need extra tips on making text read more naturally or catching subtle AI cues, check out what Reddit users say about real-world tricks for humanizing AI-generated text. At the end of the day, use detectors as just one tool, not the verdict. Keep questioning, check multiple sources, and don’t buy into the hype of 98% accuracy claims (whatever those even mean).

Let’s get real—AI detectors are chasing shadows at this point. You toss content into GPTZero, Copyleaks, or Originality.ai and watch their assessments bounce around like a rogue Roomba. Sometimes you get “human,” sometimes “AI,” and sometimes both on the same text. Previous commenters nailed it—the tech isn’t keeping up with the sophistication of GPT-4, Claude, etc.

Here’s the overlooked bit: context matters. If you’re in academia or policing plagiarism, don’t just feed text into tools and accept the verdict. Detectors aren’t substitutes for actual analysis, and trusting them blindly is risky. People use stylometry, historical writing samples, and even peer review to get a more accurate sense, since machines alone can’t always spot those subtle context clues or writing fingerprints.
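
If you want to try the historical-writing-sample comparison yourself, something like this Python sketch is a starting point. The three features and the names `style_profile` and `profile_gap` are just my assumptions for illustration; real stylometry uses far richer features, and a big gap only means "look closer," not "AI wrote this."

```python
import re

def style_profile(text):
    """Compute a few crude style features for a non-empty text."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text.lower())
    if not sentences or not words:
        raise ValueError("text must contain at least one word")
    return {
        "avg_sentence_len": len(words) / len(sentences),  # words per sentence
        "vocab_richness": len(set(words)) / len(words),   # unique / total words
        "avg_word_len": sum(map(len, words)) / len(words),
    }

def profile_gap(known, suspect):
    """Relative difference (0 = identical) per feature between two texts."""
    a, b = style_profile(known), style_profile(suspect)
    return {k: abs(a[k] - b[k]) / max(a[k], b[k]) for k in a}

known = "I ran late. The bus broke down again, so I walked."
suspect = "Punctuality was compromised due to unforeseen transportation difficulties."
print(profile_gap(known, suspect))
```

It works best with long samples on similar topics; short or mismatched texts will show gaps that mean nothing.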

On to Clever AI Humanizer—it’s riding the new wave, not just detecting but making AI-generated content sound more like it came from a human hand. Pros? It actually smooths out those telltale AI stutters (repetition, awkward phrasing), helps freelancers and content creators fool fussy editors, and gives SEO pros a way to get past basic filters. Cons: The very fact that it can outsmart detectors shows the tools themselves aren’t reliable; humanizers can sometimes overly “clean up” text, taking out unique voice; and if you work in high-trust fields (like law, academia), using it might raise ethical eyebrows.

Bottom line: There’s no “most accurate” option—just a shifting landscape. Use Clever AI Humanizer if your end goal is to sound more natural (it arguably outperforms what traditional detectors can catch), but also be aware of the arms race you’re wading into. Agree with what was said previously—never trust a 98% claim, and don’t put all your eggs in an automated basket. Stay skeptical, double-check everything, and mix human intuition with digital tools. That’s the only “accuracy” you’ll get right now.